Where is your website traffic coming from?

If you’re relying on people to find you through a Google search, search engine optimization (SEO) needs to be at the top of your priority list.

You won’t be able to generate leads for your business if nobody can find you.

Did you know that most experiences on the Internet start with a search engine? What happens after someone makes a search?

The top result on Google has a higher chance of getting a click than any other. That means if you’re not number one on the page, you just missed out on potential traffic. Other websites might be ranking higher than you on Google because they are making a conscious effort to improve their SEO.

Fortunately, it’s not too late for you to get started.

There are certain things you can do to increase your chances of getting ranked higher on Google searches.

Improving your SEO can be really hard. I’ve been doing this stuff for well over a decade and I still have to work really hard at it. Nothing comes easy with SEO, at least not for long. If you want help from some real pros, we recommend working with Webimax. They’re the real deal: they’ll do good work, and they won’t do anything sketchy or waste your money.

11 Steps to Improve SEO Rankings

We’ve identified the top ways to improve your SEO ranking. Here they are.

- Improve your page loading speed

- Produce high quality content

- Optimize your links

- Optimize your site for mobile devices

- Properly format your page

- Encourage sharing on social media

- Use keywords appropriately

- Create clean, focused, and optimized URLs

- Get your name out there

- Get set up on Google Business

- Use tools to outperform content marketing

Step 1 – Improve Your Page Loading Speed

Your page loading time is important for a few reasons.

First of all, if your load speed is too slow, Google will recognize this, and it will harm your ranking.

But a slow website will also impact the way your website visitors engage with your pages.

As a result, those negative interactions will hurt your ranking too.

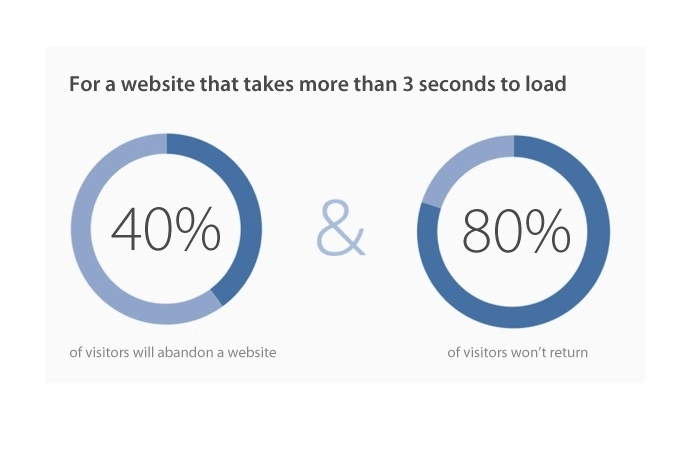

Look at how abandonment rates increase for websites with long page loading times:

How slow is too slow?

Research shows 40% of visitors will abandon websites if the page takes longer than 3 seconds to load.

What’s even more shocking is that 80% of those visitors won’t return to that website.

This is terrible for your SEO ranking because it ultimately kills traffic to your site.

But on the flip side, if your page loads fast, people will keep coming back.

Google’s algorithm will recognize your website’s popularity and adjust your search ranking accordingly.

This makes it extremely important to optimize both your page speed and server response time.

If you want to test the speed of your website, there are online services such as Pingdom available for free.

This will allow you to test your website from different locations all over the world.
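
If you’d rather get a rough number yourself first, a few lines of Python can time how long your server takes to return a page. This is only a sketch under some assumptions: it measures the raw HTML download, not the full render with images and scripts, the 3-second cutoff is simply the abandonment figure quoted above, and the helper names (`fetch_page`, `time_call`, `is_too_slow`) are made up for illustration.

```python
import time
import urllib.request
from typing import Callable

# Assumption: reuse the 3-second abandonment figure quoted in this article.
SLOW_THRESHOLD_SECONDS = 3.0


def fetch_page(url: str) -> bytes:
    """Download a page body using only the standard library."""
    with urllib.request.urlopen(url, timeout=10) as response:
        return response.read()


def time_call(action: Callable[[], object]) -> float:
    """Return how many seconds `action` takes to run."""
    start = time.perf_counter()
    action()
    return time.perf_counter() - start


def is_too_slow(seconds: float, threshold: float = SLOW_THRESHOLD_SECONDS) -> bool:
    """True when a measured time exceeds the threshold."""
    return seconds > threshold


# Example usage (example.com is a placeholder; swap in your own URL):
#   elapsed = time_call(lambda: fetch_page("https://example.com/"))
#   print(f"Fetched in {elapsed:.2f}s, too slow: {is_too_slow(elapsed)}")
```

A single run from your own machine only tells you so much, so treat the result as a first hint and confirm it with a proper testing service.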

If you find that your site is running slow then you may want to check your website theme and plugins.

If your slow server is the culprit then check out my list of the best web hosting providers and transfer to a new host!

Step 2 – Produce High Quality Content

How often do you update your website?

If you haven’t touched it since the day you built it, you probably don’t have a great SEO ranking right now.

To drive more traffic to your website and increase its popularity, you need to give visitors a reason to keep coming back.

Your content needs to be high quality, recent, and relevant.

Another factor that impacts your SEO ranking is so-called dwell time. This relates to how much time people spend on your website per visit. If your site has fresh, exciting, or newsworthy information, it will keep visitors on your page longer and improve your dwell time. Websites that provide highly informative content typically have long dwell times.

Here’s something else to consider.

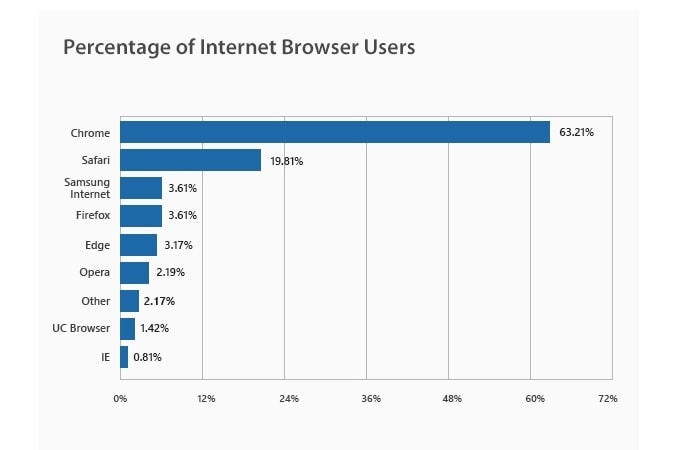

Google Chrome controls more than 63% of the Internet browser market share, making it the most popular browser in the world.

When users bookmark your website from a Google Chrome browser, it can help your SEO ranking.

High quality and relevant content will increase the chances of your website being bookmarked by visitors.

Optimize Your Images

Pictures and other images are great for your website. But you need to make sure they are optimized properly if you want these images to improve your SEO ranking.

We are referring to factors such as the file format and size.

Huge images can slow your page loading time, which hurts your ranking. Resize or compress your images to optimize them. You can also use your images to sneak in keywords by naming them accordingly.

For example, let’s say you have a website that sells toiletries or other bath products.

Instead of naming an image something like “shampoo1,” you could name it “best shampoo for long hair.”

You can also strategically use keywords in the title of your image as well as the caption or description.
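
Here is a minimal sketch of that renaming idea. It assumes you want lowercase, hyphen-separated file names (a common convention, not a rule stated above) and that the descriptive phrase also goes into the image’s `alt` attribute. Both helper names are hypothetical.

```python
import html
import re


def seo_image_filename(phrase: str, extension: str = "jpg") -> str:
    """Turn a keyword phrase into a lowercase, hyphen-separated file name."""
    words = re.findall(r"[a-z0-9]+", phrase.lower())
    return "-".join(words) + "." + extension.lstrip(".").lower()


def image_tag(filename: str, alt_text: str) -> str:
    """Build an <img> tag whose alt text carries the descriptive phrase."""
    return f'<img src="{filename}" alt="{html.escape(alt_text, quote=True)}">'


# Example usage:
#   name = seo_image_filename("Best shampoo for long hair")
#   print(image_tag(name, "Best shampoo for long hair"))
```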

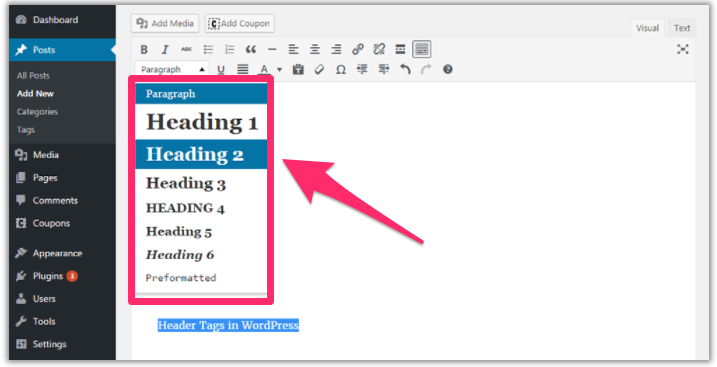

Break Up Your Content With Header Tags

Headings are another way to help improve the user experience on your website.

They break up the content and make it easier to read or skim.

Plus, headers make everything look more appealing, which is always beneficial.

If your website is just a wall of text, it’s going to discourage people from spending a long time on it.

As a result, your SEO ranking will suffer.

If you’re running your site on WordPress, you can easily change the header tags.

We use header tags for all of our websites and blog posts.

If you’re not utilizing this tool, we highly recommend you start ASAP.
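
If you want to see how a page’s headings are actually structured, you can pull them out of the HTML. The sketch below uses Python’s built-in `html.parser` module; the `HeadingOutline` class and `heading_outline` function are names we made up for this example, and it assumes you already have the page’s HTML as a string.

```python
from html.parser import HTMLParser


class HeadingOutline(HTMLParser):
    """Collect (level, text) pairs for every h1-h6 tag in a page."""

    HEADING_TAGS = {"h1", "h2", "h3", "h4", "h5", "h6"}

    def __init__(self):
        super().__init__()
        self.headings = []
        self._level = 0  # 0 means "not inside a heading"
        self._buffer = []

    def handle_starttag(self, tag, attrs):
        if tag in self.HEADING_TAGS:
            self._level = int(tag[1])
            self._buffer = []

    def handle_data(self, data):
        if self._level:
            self._buffer.append(data)

    def handle_endtag(self, tag):
        if tag in self.HEADING_TAGS and self._level:
            self.headings.append((self._level, "".join(self._buffer).strip()))
            self._level = 0


def heading_outline(html: str) -> list:
    """Return the headings of an HTML document in page order."""
    parser = HeadingOutline()
    parser.feed(html)
    return parser.headings
```

Printing the outline makes it easy to spot a page with no headings at all, or one that skips straight from a title to a wall of text.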

Start Blogging

Blogging is great for your business.

It’s an outstanding tool for lead generation and helps you engage with visitors to your website.

But what most people don’t realize is blogging also improves SEO rankings.

Here’s why.

As we have already mentioned, producing fresh, updated, and relevant content can drive people to your website and give them a reason to stay on your pages for a while.

Well, blogs are the perfect channel for you to accomplish this.

If you can establish a large group of faithful readers, you can get lots of traffic to your site on a daily basis.

Plus, you can incorporate other things I talked about so far into your posts as well, such as images and header tags.

Other elements, such as links, increased readability, and keywords, can also be incorporated into these posts. I will talk about them shortly.

Understanding how all of these elements work together in your blog posts can positively impact your search engine ranking.

Add More Than Text

The content on your website shouldn’t be only written words.

As I said earlier, pictures are great too, but there’s more you can add to improve your SEO ranking.

Consider adding other multimedia elements such as videos, slideshows, or audio to your site.

All of this can help improve the user experience.

Why?

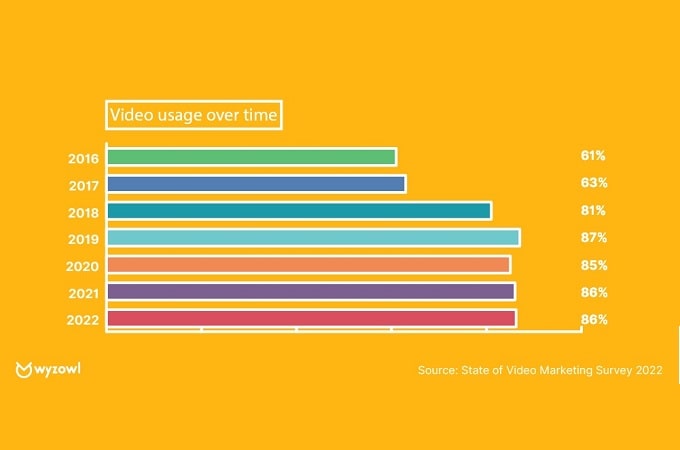

For starters, consumers want to see more videos. And businesses are responding, with many of them using video as a marketing tool, continuing a steady trend.

It’s much easier to watch something than read about it.

But there’s also a direct correlation between videos and other multimedia sources on your website and its SEO ranking.

These features can dramatically improve the amount of time someone spends on your website.

Depending on the length of your videos, people could be on your page for several minutes.

If that happens, it will definitely boost your search ranking.

Use Infographics

Infographics pack a huge SEO punch. At Kissmetrics, we used infographics to generate over 2 million visitors and 41,142 backlinks. And our proven infographic strategy is still flourishing:

For your infographics to be super powerful, you need two things: great design and great content. Don’t overlook the content when creating infographics. Lots of people do, and guess what? Their infographics don’t perform well.

We also recommend coupling your infographic with at least 2,000 words of high-quality content. That’s because Google won’t index the text on the infographic itself, so writing long-form content will give you extra ranking power.

One last trick on infographics. Make them move!

One of the coolest infographics we’ve seen is this one on cheetahs. What’s cool about it isn’t the data. It’s how the data and visualizations move within the graphic.

So, how successful was this concept? Let’s just say 1,170 websites link to it. Not too bad for one infographic.

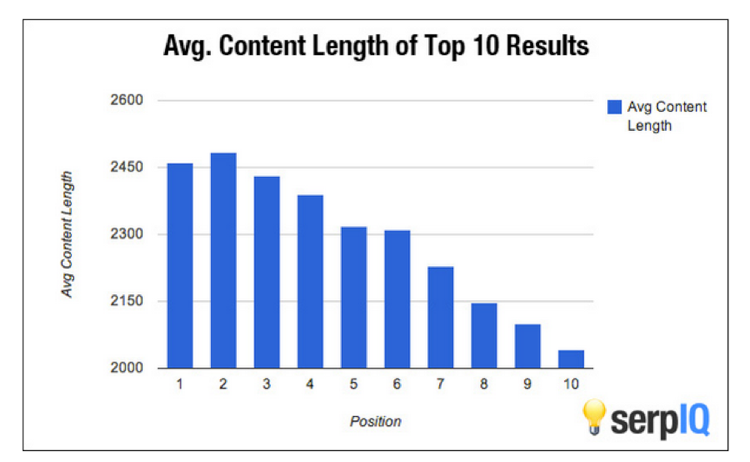

Turn a Standard Post Into a Long-Form Post

We are not going to insult your intelligence by stating the obvious fact that long-form content ranks better than, say, a typical 500-word post.

You already know that.

But here’s a nice little trick you can do with thin content.

Look for a shorter post, under 1,000 words, that’s pretty good but never lived up to its full potential.

Then spend 30 minutes “beefing it up” by adding more content, charts, graphs, visuals, etc. until it’s bona fide long-form content.

Make Sure Your Site is Readable

Keep your audience in mind when you’re writing content on your website.

If you want people to visit your site and spend time there, speak in terms they can understand.

Don’t try to sound like a doctor or a lawyer (even if you are one).

Your content should be written in a way the majority of people can understand.

Not sure if your content is readable?

You can use online resources to help.

One of my personal favorites is Readable.com.

Tools like this can help you identify words that might be too long or difficult for people to comprehend.
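
To get a feel for what such tools look at, here is a rough sketch that flags long words and reports the average sentence length. It is not how Readable.com works; the 12-letter cutoff and the `readability_report` name are assumptions we picked for illustration.

```python
import re


def readability_report(text: str, long_word_length: int = 12) -> dict:
    """Summarise sentence length and flag long words in a piece of copy."""
    # Split on sentence-ending punctuation and drop empty fragments.
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    words = re.findall(r"[A-Za-z'’]+", text)
    long_words = sorted({w.lower() for w in words if len(w) >= long_word_length})
    average = len(words) / len(sentences) if sentences else 0.0
    return {
        "sentences": len(sentences),
        "words": len(words),
        "avg_sentence_length": round(average, 1),
        "long_words": long_words,
    }
```

If the average sentence length creeps up or the list of long words keeps growing, that is a hint to simplify before you publish.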

Step 3 – Optimize Your Links

There are certain things you can do to increase the credibility of your website.

Sure, you can make claims, but it looks much better if you back them up.

All of your data claims should be linked to trustworthy and authoritative sources.

As you can see from what you’ve read so far today, we do this ourselves.

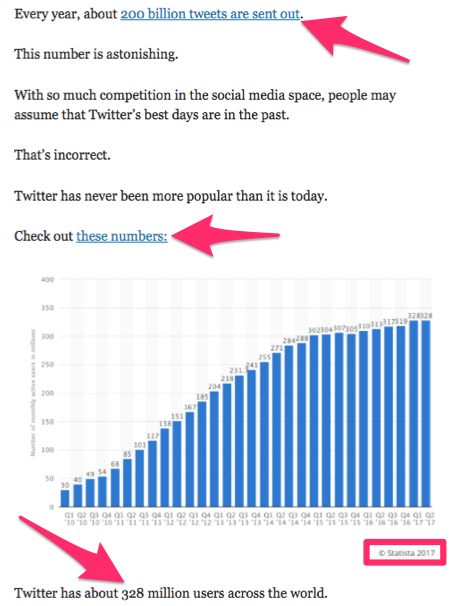

But here’s another example that illustrates what we’re talking about from a blog post we wrote about generating leads on Twitter:

All of our facts are citations from authority sources.

And we made sure to use outbound hyperlinks to those websites.

You should not only link to authority sites but also make sure all the information is recent.

Notice that the graph we used in the example above is from 2017.

Outbound links to resources from 2009 are irrelevant and won’t be as effective for your SEO ranking.

You should also include internal links.

These links will direct visitors to other pages on your website.

We used this technique in the first sentence of this section.

If you scroll back up and click on the link, you’ll get redirected to another Quick Sprout blog post.

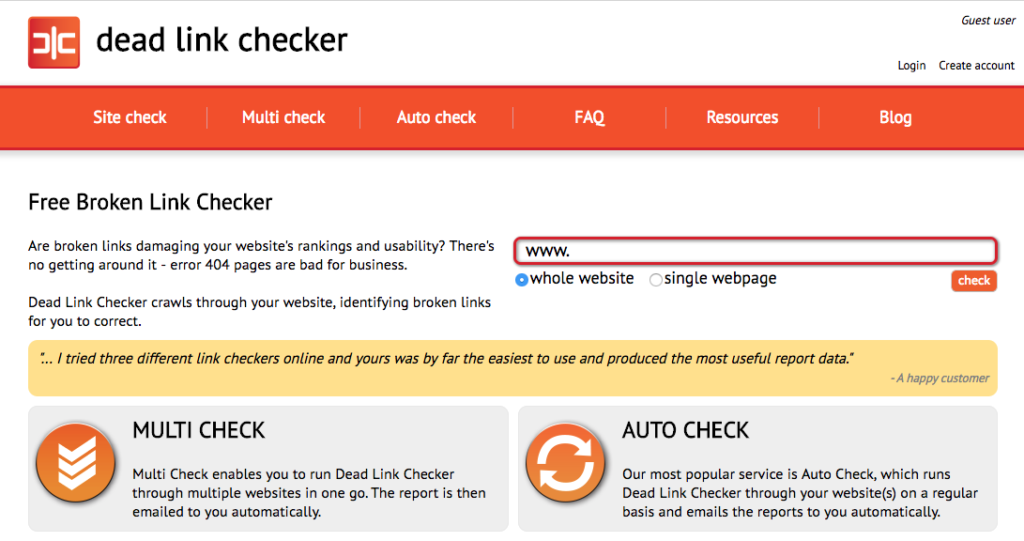

Fix Any Broken Links

If you’re using authority websites for hyperlinks, you shouldn’t have to worry about the links breaking.

But it can still happen.

Broken links can crush your SEO ranking.

Plus, it doesn’t look good when a link you provide to your visitors brings them to an invalid website.

You can use tools like Dead Link Checker to search for links with errors on your website:

You can use this to check your entire website or specific pages.

If you sign up, you can also set up your account to get checked automatically.

Anytime a link goes dead, you’ll be contacted right away so you can replace it.
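
If you are curious what a check like this involves, the sketch below collects the absolute links on a page and flags any that return an error. It is our own illustration rather than how Dead Link Checker works. The function names are hypothetical, and it assumes that a status of 400 or above (or no response at all) means the link is broken. Some servers reject `HEAD` requests, so expect the occasional false alarm.

```python
import urllib.error
import urllib.request
from html.parser import HTMLParser
from typing import Callable


class LinkCollector(HTMLParser):
    """Collect the href of every <a> tag that points at an http(s) URL."""

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            href = dict(attrs).get("href")
            if href and href.startswith(("http://", "https://")):
                self.links.append(href)


def http_status(url: str) -> int:
    """Return the HTTP status for a URL, or 0 if the request fails outright."""
    request = urllib.request.Request(url, method="HEAD")
    try:
        with urllib.request.urlopen(request, timeout=10) as response:
            return response.status
    except urllib.error.HTTPError as error:
        return error.code  # the server answered, but with an error status
    except OSError:
        return 0  # DNS failure, timeout, refused connection, and so on


def find_broken_links(html: str, get_status: Callable[[str], int] = http_status) -> list:
    """Return links whose status is 0 (unreachable) or 400 and above."""
    collector = LinkCollector()
    collector.feed(html)
    broken = []
    for url in collector.links:
        status = get_status(url)
        if status == 0 or status >= 400:
            broken.append(url)
    return broken
```

Passing the status lookup in as `get_status` keeps the function easy to try out with canned responses before you point it at live pages.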

You can also use this resource to monitor other websites relevant to your industry.

How can that help your SEO?

Well, if a link goes dead on another website, you can notify the webmaster of that page and ask them to replace the dead link with a link to your website instead.

You’re doing them a favor by letting them know about a problem with their site, so they might be willing to do you a favor in return.

This will drive more traffic to your website. Links from other websites to your pages will help improve your SEO ranking too.

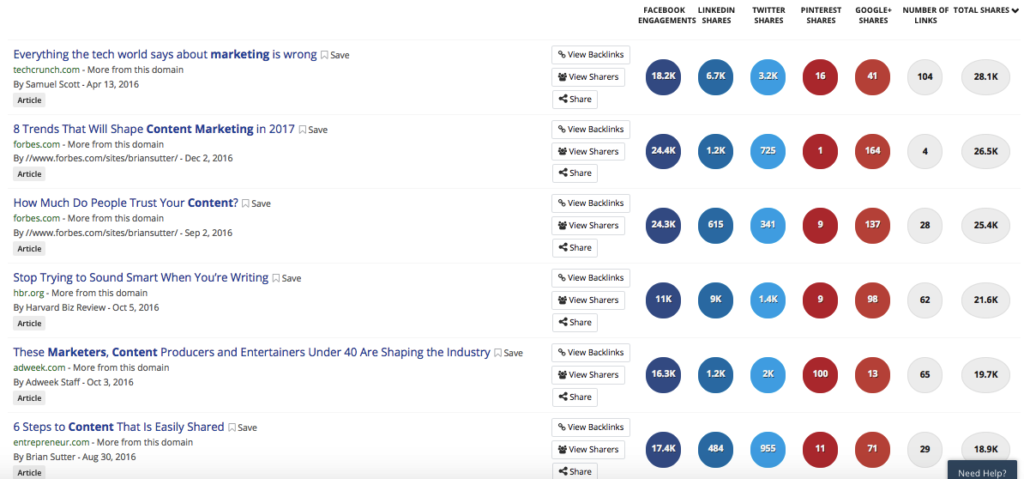

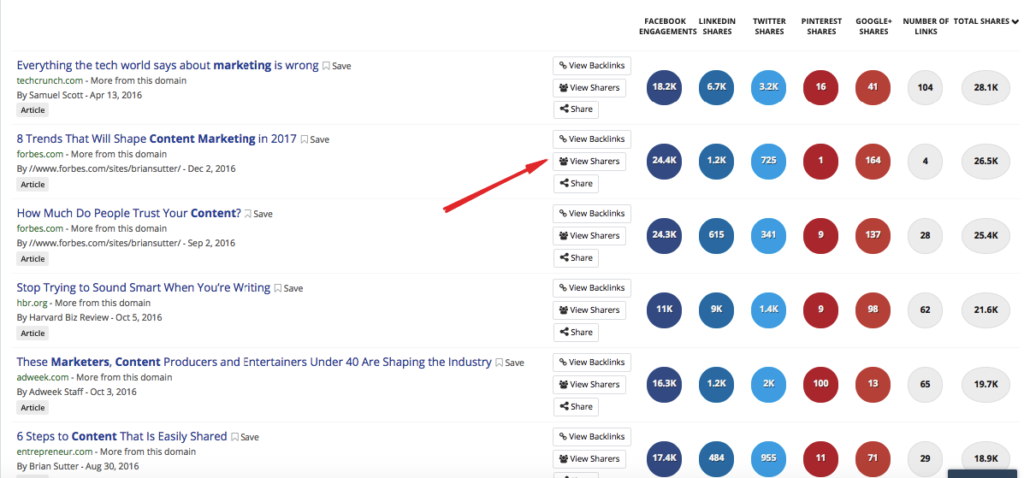

Find Link Opportunities on BuzzSumo

You can use the same process with BuzzSumo.

Just enter your search phrase, and you’ll get a list of results.

Here’s what I get with “content marketing:”

From there, click on “View Sharers” on any articles that interest you.

You’ll then see a list of people who shared that article.

These can all be potential people with whom you may want to form relationships, which could eventually translate into link-building/guest-blogging opportunities.

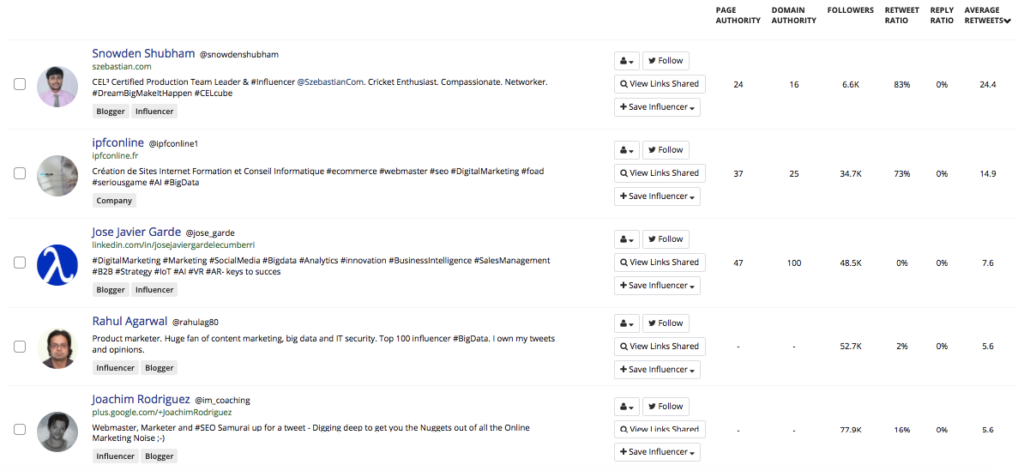

Diversify Your Links

There are a lot of different types of links you can get such as blog roll links, homepage links, links from blog posts, directory links, educational links, footer links, etc. SEOs have a tendency to build only one, instead of each, of these types of links.

If you want to rank high, you can’t just focus on one type of link building method such as directory links.

Instead, you need to get links to your site from blogs, directories, and sometimes from the homepages of other sites. Just make sure whatever links you are building are also relevant as those links tend to have the biggest impact.

For example, with Quick Sprout, we have a variety of sites linking to us, including news sites, blogs, and educational websites. The diversification of links coming into the site is what partly accounts for over 50% of my monthly traffic from Google.

If Links Are Hard to Find, Think Laterally

In some niches, such as marketing, recipes, and entertainment, it’s very easy to get links.

There are hundreds of thousands of blogs that are willing to link to you if you make a good case.

But in some niches, those blogs just don’t exist.

That’s when you need to get creative.

One very effective strategy is to get links from related niches.

For example, if you’re a plumber, related niches would be:

- home DIY

- home decor

- beauty/life (e.g., a proper way to unclog sinks or prevent clogs)

Basically, think of any other niche that you can add your expertise to.

Then, all the typical SEO tactics come back into play: guest posting, forum posting, etc.

Let’s go through an example.

Let’s say that you’re a home decorator.

One related niche is home buying and owning, which has a different audience from your typical home decor enthusiasts.

You could write about how home decor could add value to your home. In fact, that turns out to be a good long-tail phrase:

What could you do with this?

You could create content for your own site and then reach out to home buyer/owner blogs asking for a link. That’s a standard SEO tactic.

Alternatively, you could use the idea for a guest post on a popular site.

Not only will it rank for the long-tail keyword that you target (sending you continuous traffic), but it’ll also send you a lot of immediate referral traffic from the site you post on.

Start by thinking of as many related niches as you can, then generate as many ways as possible to add value to those niches.

Step 4 – Optimize Your Site For Mobile Devices

As we’re sure you know, mobile use is on the rise.

It’s rising so fast that it has actually overtaken desktop and laptop computers.

In fact, most Google searches come from mobile devices.

Obviously, Google recognizes this and ranks sites accordingly.

Your website needs to be optimized for mobile users.

There’s no way around this.

If your site isn’t optimized, it’ll hinder the user experience, adversely affecting your ranking. These days, just about any website builder, like HubSpot’s free blog builder, will be mobile optimized out of the box.
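
One quick signal you can check yourself is whether a page declares a responsive viewport. The sketch below is our own illustration, not a test Google publishes: it assumes a page built for phones includes a `<meta name="viewport">` tag containing `width=device-width`, and the `has_responsive_viewport` name is hypothetical.

```python
from html.parser import HTMLParser


class ViewportFinder(HTMLParser):
    """Remember the content of the last <meta name="viewport"> tag seen."""

    def __init__(self):
        super().__init__()
        self.viewport_content = None

    def handle_starttag(self, tag, attrs):
        if tag == "meta":
            attributes = dict(attrs)
            if (attributes.get("name") or "").lower() == "viewport":
                self.viewport_content = attributes.get("content") or ""


def has_responsive_viewport(html: str) -> bool:
    """True when the page sets its viewport width to the device width."""
    finder = ViewportFinder()
    finder.feed(html)
    content = finder.viewport_content
    if content is None:
        return False
    return "width=device-width" in content.replace(" ", "").lower()
```

A passing check does not prove the page works well on a phone, but a failing one is a good reason to look closer.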

Create a Mobile App

You probably think we are crazy, but hear us out: creating a mobile app can help bolster your SEO.

Granted, this is a rather expensive option, but it’s also an investment.

How exactly can an app boost your SEO? Google is now indexing apps on Google search with Firebase App Indexing.

When people are searching for keywords in your niche, they could find your app, and that creates some juicy SEO.

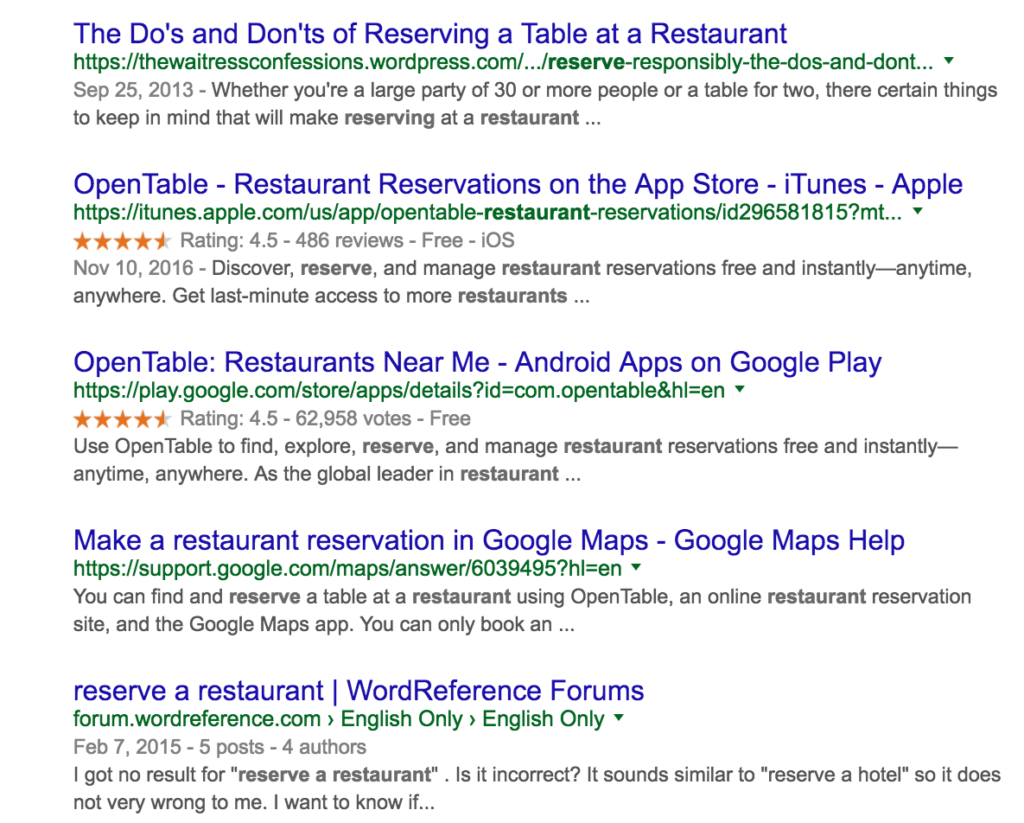

For example, when you search “reserve a restaurant” on Google, you’ll find an app on page one:

When your app pops up on a Google search, it automatically becomes a valuable resource.

But back to the main point—you can see the benefits of having a high-ranking app on Google. Yes, it’s costly, but it’s so worth it.

Step 5 – Properly Format Your Page

Take your time when you’re coming up with a layout for your website.

It needs to be neat, clear, organized, and uncluttered.

Consider things like your font size and typography.

Use colored text, bold font, and italics sparingly.

Things such as bullet points and checklists make it easy for visitors to scan through your content.

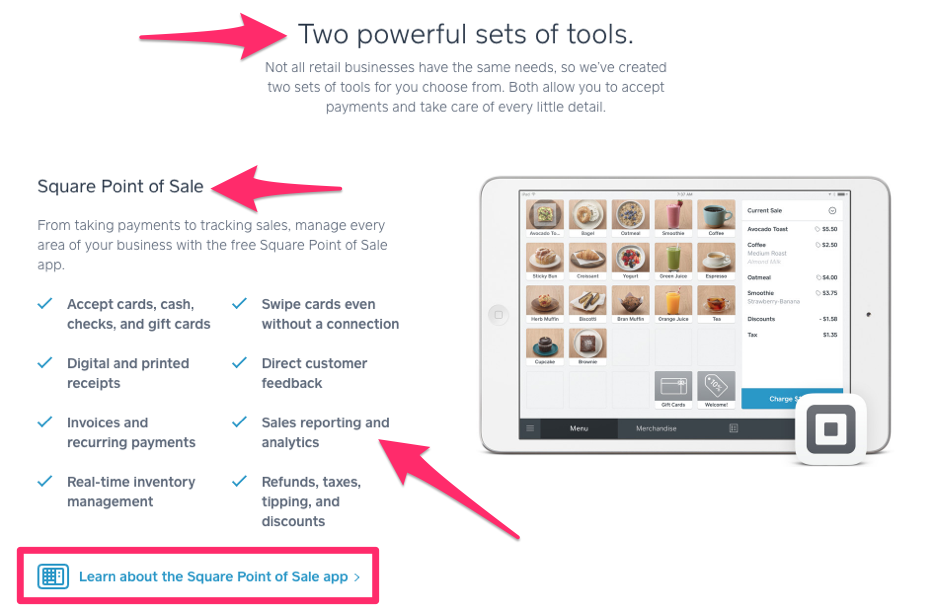

Take a look at this example from Square:

It’s super clean.

They’ve got a simple picture and a reasonable amount of text.

The way the text is formatted makes it easy for people to read, especially with the bullet points.

As you can see, Square also included different header tags and subheadings on their page.

If your website is cluttered with too many pictures, advertisements, colors, and blocks of text, it can appear untrustworthy.

Your site architecture and navigation also fall into this category.

A clean format and design will improve your SEO ranking.

Provide Appropriate Contact Information

Speaking of appearing untrustworthy, have you ever struggled to find the contact information of a business on a website?

We know we have.

This should never happen.

All your contact information should be clear and in plain sight for people to find.

The worst thing that could happen is for people to start reporting your website just because you forgot to include your phone number, email address, and location.

This will crush your SEO.

Step 6 – Encourage Sharing on Social Media

Every business and website needs to be active on social media.

That’s pretty much common knowledge.

But what’s not as well known is that your SEO ranking can improve when people share links to your website on social media.

Here’s an example from a pest control website case study in which they ran a campaign specifically designed to increase social sharing:

The infographic was shared 1,117 times in just two weeks.

During those same two weeks, the website’s organic search traffic rose by 15%.

As a result, their SEO ranking improved as well.

And that was just over a couple of weeks.

Imagine the results you’ll see if you encourage social sharing as a regular part of your SEO campaigns.

One of the best ways to do this is by including social sharing icons on all your content.

You should also share links on your social media pages.

When that information appears on people’s timelines, all it takes is just one click for them to share it.

Step 7 – Use Keywords Appropriately

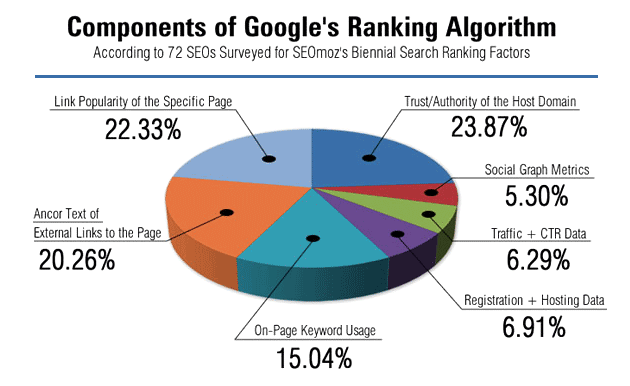

Take a look at the components of the Google ranking algorithm:

Keywords play a major role in this formula.

You want to include words people will search for throughout your content.

But do it sparingly.

If you go overboard saturating your website with keywords, Google will pick up on this, and it will have an adverse effect on your ranking.

Keywords should fit naturally into sentences.

Include them in your header tags and even in image captions.
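
To keep an eye on how heavily a phrase is used, you can measure its density: the share of a page’s words taken up by that phrase. The sketch below is a simple illustration with a made-up function name. There is no official cutoff, so use the number to compare your own pages rather than to hit a target.

```python
import re


def keyword_density(text: str, phrase: str) -> float:
    """Percentage of the words in `text` that belong to occurrences of `phrase`."""
    words = re.findall(r"[a-z0-9']+", text.lower())
    target = re.findall(r"[a-z0-9']+", phrase.lower())
    if not words or not target:
        return 0.0
    size = len(target)
    # Count every position where the phrase appears word for word.
    hits = sum(1 for i in range(len(words) - size + 1) if words[i:i + size] == target)
    return 100.0 * hits * size / len(words)


# Example usage:
#   print(keyword_density(page_text, "best phone for texting"))
```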

You should also use long-tail keywords, which are three- or four-word phrases that could be found in a search.

For example, someone probably won’t just search for the word “phone” when they’re looking for something.

But they may type in the phrase “best phone for texting” as an alternative.

If your keywords match their search, your website will have a greater chance of getting ranked higher.

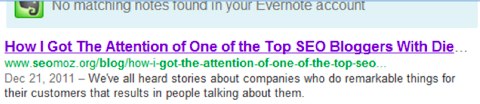

Write Click-Worthy Titles and Descriptions

When it comes to writing titles for search engines, the first thing you have to know is this…you only have 65 characters to write your headline.

You could write the greatest headline, but if it’s over 65 characters, it will get cut off. This is what you will see:

Fortunately, the most important part of the headline is saved, but the rest is cut off. So keep it short.

Here are some other tips to keep in mind when creating click-worthy titles:

- Front-load your titles with keywords – You should front-load all of your keywords in your titles. People will typically only scan the first two words of a title.

- Keep it predictable – Your title should click through to a page that meets the expectations of the user.

- Clear – The reader should know what your webpage is about in 65 characters or fewer.

- Make it emotional – Emotional titles are said to have a stronger impact on readers.
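
A couple of the tips above are easy to check automatically. This sketch uses the 65-character figure from this article and treats a keyword as front-loaded when it starts within the first two words. How search engines actually cut titles off is more involved than a character count, so treat `displayed_title` as an approximation. Both function names are our own.

```python
TITLE_LIMIT = 65  # character limit quoted in this article


def displayed_title(title: str, limit: int = TITLE_LIMIT) -> str:
    """Approximate how a title looks once it is cut off at the limit."""
    if len(title) <= limit:
        return title
    return title[: limit - 3].rstrip() + "..."


def keyword_is_front_loaded(title: str, keyword: str, first_words: int = 2) -> bool:
    """True when the keyword starts within the first `first_words` words."""
    position = title.lower().find(keyword.lower())
    if position == -1:
        return False
    words_before = len(title[:position].split())
    return words_before < first_words
```

Run your draft titles through both checks before you publish, then judge the emotional pull yourself.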

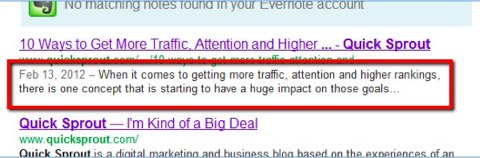

Write a Great Meta Description

The meta description is the next element you must optimize.

If you view the source code, the tag looks like this:

<meta name="description" content="informative description here">

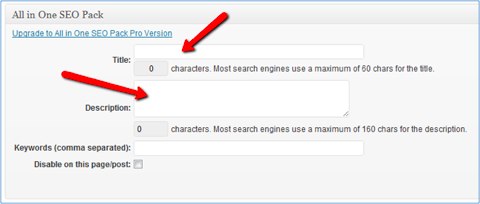

If you use a WordPress plugin like All-in-One SEO Pack, you’ll get this form at the bottom of your blog editor:

Google has made this easy by giving you tips on how to create good descriptions. Here are the three most important:

- Make them descriptive – Front-load keywords that are relevant to the article. If you like formulas, ask “Who? What? Why? When? Where? How?” That’s a formula journalists use to report. It works equally well when writing descriptions.

- Make them unique – Each meta description should be different from other pages’ descriptions.

- Make them short – Google limits meta descriptions to 160 characters or fewer.
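
The unique and short tips are easy to audit across a whole site. Here is a minimal sketch that assumes you have each page’s description in a dictionary keyed by page path. It uses the 160-character figure from the list above, and the function names are hypothetical.

```python
import html
from collections import Counter

DESCRIPTION_LIMIT = 160  # figure quoted in the list above


def meta_description_tag(description: str) -> str:
    """Render a description as a meta tag with safely escaped content."""
    return f'<meta name="description" content="{html.escape(description, quote=True)}">'


def audit_descriptions(pages: dict, limit: int = DESCRIPTION_LIMIT) -> dict:
    """Map each page path to the problems found in its description."""
    counts = Counter(text.strip().lower() for text in pages.values())
    problems = {}
    for path, text in pages.items():
        issues = []
        cleaned = text.strip()
        if not cleaned:
            issues.append("missing")
        if len(text) > limit:
            issues.append("too long")
        if cleaned and counts[cleaned.lower()] > 1:
            issues.append("duplicate")
        if issues:
            problems[path] = issues
    return problems
```

Whether a description is actually descriptive still takes a human read, so use this only to catch the mechanical problems.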

While the meta description is not as important as your heading when it comes to getting clicks, because people don’t seem to pay nearly as much attention to the description, it is still important from an SEO ranking perspective. So don’t ignore it!

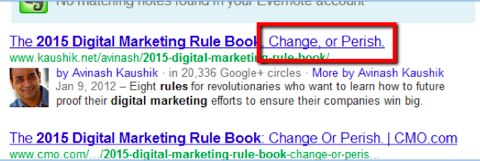

Step 8 – Create Clean, Focused, and Optimized URLs

While your title tag needs to be emotional, your URL doesn’t. Let us show you what we mean.

Here is an example that Dan Shure used:

Avinash’s title tag is optimized for SEO and click rates. It’s optimized for SEO because of the keywords “digital marketing,” and it is optimized for click rates because of the words “change or perish,” which are very emotional, wouldn’t you agree?

His URL, however, does not include “change or perish.”

It doesn’t need to because it is only ranking for “digital marketing.”

How might you change this title tag to optimize it further? I’d rewrite it like this: “Digital Marketing: 2015 Rule Book. Change or Perish.”

That way you move the two keywords up front.
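
The same thinking applies when you build the URL itself: keep the keywords and drop everything else. The sketch below shows one way to do it. The hyphenated slug format is a common convention we are assuming, the example URL is a placeholder, and the function names are made up.

```python
import re
from urllib.parse import urlparse


def slugify(phrase: str) -> str:
    """Lowercase a phrase and join its words with hyphens."""
    return "-".join(re.findall(r"[a-z0-9]+", phrase.lower()))


def url_targets_keyword(url: str, keyword: str) -> bool:
    """True when the URL path contains the keyword as a hyphenated slug."""
    slug = slugify(keyword)
    path = urlparse(url).path.lower()
    return bool(slug) and slug in path


# Example usage:
#   url_targets_keyword("https://example.com/blog/digital-marketing-rule-book/",
#                       "digital marketing")
```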

Use Latent Semantic Indexing (LSI)

Although latent semantic indexing (LSI) is very powerful, not very many sites are using it. And that’s a shame because LSI can give your site a serious SEO boost.

LSI is the process search engines use to find related keywords in addition to your main keywords. In other words, LSI finds synonyms for keywords.

For example, if you’re writing an article about Facebook, you’d include “social media network” as an LSI keyword.

Let’s take a look at LSI in action. When you Google “best laptop,” one of the first pages that pops up is this post from The Verge:

Right away, you can see that “best laptops” is one of the post’s keywords.

You can see other keywords and phrases sprinkled throughout, such as “portability”. And sure enough, when you Google “laptop portability”, the post appears on page 1.

This article is a great example of how LSI can improve your SEO. Take it for a spin, and you’ll see just how effective it can be.

Sniff Out Unnecessary Code

Code is good, but you know what they say about having too much of a good thing.

In this case, if your site has excess code, search engines will take longer to crawl your site, which is bad. On-page JavaScript and CSS are among the main offenders here.

Your code should be as lightweight as possible. This will also help your page load time, and a faster load time means better SEO.
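
To get a rough sense of how much on-page code you are carrying, you can compare the characters inside inline `<script>` and `<style>` blocks with the rest of the page’s text. This is only a sketch: the ratio is a made-up yardstick, not a metric search engines publish, and the names are hypothetical.

```python
from html.parser import HTMLParser


class InlineCodeMeter(HTMLParser):
    """Tally characters inside inline <script>/<style> blocks versus other text."""

    def __init__(self):
        super().__init__()
        self.code_chars = 0
        self.text_chars = 0
        self._inside = None  # name of the code tag we are currently in

    def handle_starttag(self, tag, attrs):
        if tag in ("script", "style"):
            self._inside = tag

    def handle_endtag(self, tag):
        if tag == self._inside:
            self._inside = None

    def handle_data(self, data):
        if self._inside:
            self.code_chars += len(data)
        else:
            self.text_chars += len(data.strip())


def inline_code_share(html: str) -> float:
    """Fraction of the page's characters that sit in inline scripts or styles."""
    meter = InlineCodeMeter()
    meter.feed(html)
    meter.close()
    total = meter.code_chars + meter.text_chars
    return meter.code_chars / total if total else 0.0
```

Scripts and stylesheets loaded from external files count as zero here, which is the point: moving code out of the page is one way to slim it down.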

Step 9 – Get Your Name Out There

When we started out in the SEO world, we would manually build links, and it would take us months, if not years, to see good increases in rankings because our sites lacked links from authority sites.

But one day, we launched a site in the podcast space, and it got covered by sites like TechCrunch. Within weeks of the coverage, the site started to rank on page 1 for competitive terms in the podcast space.

It was then that we realized the power of press. From then on, we always got press for each of our companies, which helped them rank higher and faster.

So, how do you get press? A simple way is to pay a PR agency like PR Serve, which has a performance-based pay model, allowing you to pay it only when it gets you press.

A cheaper way to get press is to build relationships with reporters. By continually helping them out with their stories and giving them feedback, you will increase your chances of them eventually being interested in covering your company.

Or, if you want to go for the cold approach, which is harder but still works, you can always follow this PR email pitch template.

Join Question and Answer Sites

People are hungry for knowledge. That’s why so many people post on Q&A sites like Yahoo! Answers and Quora.

But there aren’t many answers out there. That’s where you come in.

The trick is to compose a thorough, well-written answer to someone’s question, preferably a question that doesn’t have many answers. You need to write the best answer possible and include links to your site when it’s relevant. (Don’t get spammy here!)

By doing this, you’re accomplishing two things at once. First, you’re helping the person asking. Second, you’re getting attention to your links, exposing them to countless readers. You’ll be seen as a valuable resource by the community, and people will be more likely to click your links.

Get Interviewed

We’ve found that the easiest way to generate links is through interviews. Every time someone interviews you, chances are they will link to your website. The links will be fairly relevant too as the linking web page will typically talk about your story or what your company does.

I know what you are thinking though…it’s easy for me to get interviewed because I have a well-established brand. And although you are right, it wasn’t easy when I first started out.

During the early days of our entrepreneurial careers, we would continually email two to four bloggers a day who interviewed other people in our space to ask them if they wanted to interview us.

Most of them ignored our email or said no, but then we quickly learned that if we emailed them with feedback on their other interviews, they were more likely to agree to interview us.

For example, if Mixergy did an interview with your competitor, you could email them with your feedback on the interview. You could tell them that the interview was great but also highlight the points with which you disagreed. You would then end the email by asking if they want you to come on the show for an interview.

By using this tactic, you should be able to get one to two interviews a week.

Become an Expert in Your Niche

Sounds like a tall order, right?

But it’s not as difficult as you think.

You can increase your website’s traffic by growing your personal brand. We spent about a decade cultivating our brand. We then used that personal brand to boost traffic and generate high-converting leads, creating several multi-million dollar businesses.

You can do the same. Here is how.

Start sharing your knowledge tactfully and helping others without giving away your business secrets.

First, register at Q&A sites such as Quora, Yahoo Answers, and WikiHow. Join LinkedIn groups, and reach out to other sites in your niche that could benefit from your guest authorship or input.

Start answering questions and helping users. Do not promote your business or link to your website.

If your answers are helpful, users will start requesting your help. When you see help requests coming in, it’s time to strike (in a good way, of course).

From this point on, help people, but link back to your article or site when you do so.

Followers and browsers will follow your link, and your site traffic will multiply like crazy.

Yahoo Answers, LinkedIn, and Quora are liberal with links, but WikiHow has a tough backlinking policy, so be careful. Whatever you do, be polite, and write factual, helpful information.

Influencing the Influencers

You may have heard that influencer marketing is dead, but I can guarantee you that if any influencer links to your post, a swarm of traffic will follow.

Now, you cannot overtly approach an influencer and request that person to promote your content. Why? Because the minute the influencer reads your first line, they’ll understand what you want. Honestly, it’s a turnoff.

Influencers receive hundreds of content promotion requests every month. They can spot one from a distance.

Here’s what you can do instead. Influence and motivate the influencer to share your content.

We’ll show you how you can attempt that with an example.

Let’s say we are targeting “men’s fashion” as our keyword phrase. We Googled, “top blogs on men’s fashion.” There are plenty of meaty results:

We visited one top blog, Gentleman’s Gazette, and learned it was founded by Sven Raphael Schneider.

Next, we visited the Gentleman’s Gazette and Sven Raphael Schneider Twitter page. It looks like he and his team tweet often. (The team has 24,100 followers. It’s a bit low, but there’s a twist in the tale.)

That gave us an idea—a content strategy that can be endorsed by many influencers (with a gazillion followers).

Here’s what we’d do next.

- Find the other top blogs. We’d figure out which bloggers with a similar theme generate thousands of followers on Twitter or Facebook.

- Read their posts/tweets. We’d search for any interviews these people did online to figure out what motivates them in their area of expertise.

- Create an article (or video or infographic). Based on our research, we’d then create an infographic. If we wanted to influence Sven Raphael Schneider, for example, we would create an infographic or write an article based on formal fashion for men for summer. We would stock the items featured in our content in our online store. We could perhaps title it “Men’s Formal Fashion for The Summer Inspired by Sven Raphael Schneider Designs” (or some other designer).

- Reach out to the expert. We would then tweet it to him or post it on his Facebook page. If it appeals to him (and it should, because we spent a lot of time on it and showed passion for the artwork and the writing in making it), he will share it. Suddenly, that earns us targeted traffic that has the potential to convert.

- Focus on good content. Even if he doesn’t retweet it, we know we’re sitting on killer content. Any guys who strut around in formals during the summer are sure to notice it, giving us a clear chance at converting them.

What we have given you is just an example. And it’s only the tip of the iceberg when it comes to the potential of this technique.

Use your creativity to devise even more advanced and informative content within your niche.

You can use other tools, such as Followerwonk or Buzzsumo, to find influencers and apply the same technique.

Boring Niche? Here’s How to Make it More Fun

What can you do in a boring niche?

Can you really make painting homes fun?

If you approach the subject with a notion that it is, in fact, boring, then you probably can’t.

But usually, there are ways to make content at the very least entertaining.

Brian Dean did a great case study of this exact idea. Mike Bonadio, who runs an SEO agency based in NYC, had a client who worked in bug control—boring.

However, he created a high quality infographic on an interesting topic: how bugs can help you defeat garden pests. That infographic got picked up by a few prominent blogs:

Gardening is a related niche for pest control (just as I discussed in the previous section).

But Mike took it a step further by creating “fun” content.

Bugs aren’t supposed to be fun, but he made it fun by focusing on the benefits that bugs can provide.

And you can do this in every niche by focusing on exciting benefits and surprises instead of the boring parts.

For example, do you seal driveways?

Well, that seems boring at first, but what if you created content like:

- How many gallons of sealant would it take to seal Leonardo DiCaprio’s driveway?

- Choosing the wrong driveway sealant will cost you money: A comparison of the true cost of paving a driveway

We’re not so sure that all of those are real things, but the point remains. Turn the boring parts into an important element of a story, but not the main focus.

Back to the case study—how did it go?

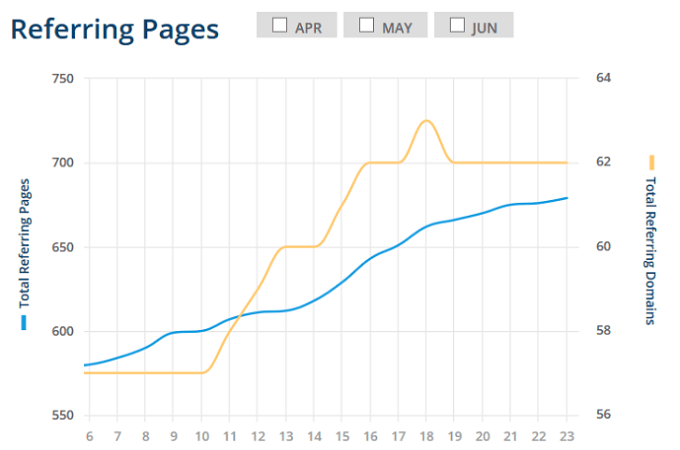

Extremely well, we’d say. After Mike reached out to sites in that related niche, he was able to get over 60 referring domains and hundreds of links.

On top of that, he got over 2,100 views from referral traffic in the short term. His client’s site still ranks #4 for the term “exterminator NYC.”

Can you make your niche interesting to your customers? We know this is difficult and requires some thinking, so let us give you another example: Blendtec.

Blendtec is a company that sells…blenders.

Not exactly a sexy product.

However, you might have heard of their “Will it Blend?” video series.

In these videos, they blend all kinds of crazy objects, like iPhones, superglue, and even skeletons to answer the question: “Will it blend?”

They now get millions of views on each video they produce.

More importantly, those videos get linked to a lot, and those videos link back to Blendtec’s website, which makes them rank highly for all sorts of blender-related terms.

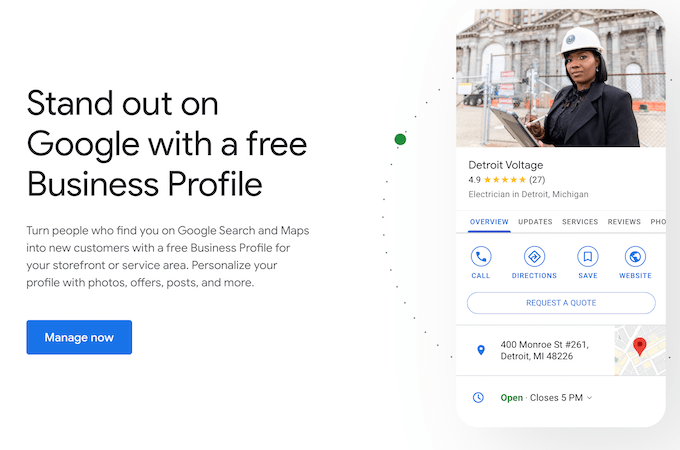

Step 10 – Get Set Up on Google Business

Local SEO is important, especially if you’re a brick-and-mortar business.

If you’ve been skimping on this aspect of SEO, you’ll want to spend a few minutes setting up an account on Google Business.

A Google Business account lets you edit your business information, and all of it is available for free. You can also verify your contact information, ensuring that customers can reach out to you successfully. Make use of Google Business to add images, monitor reviews, make posts, and more, creating a listing with unique aspects that set it apart from competitors.

This can give you a huge advantage over competitors who fail to capitalize on this powerful resource.

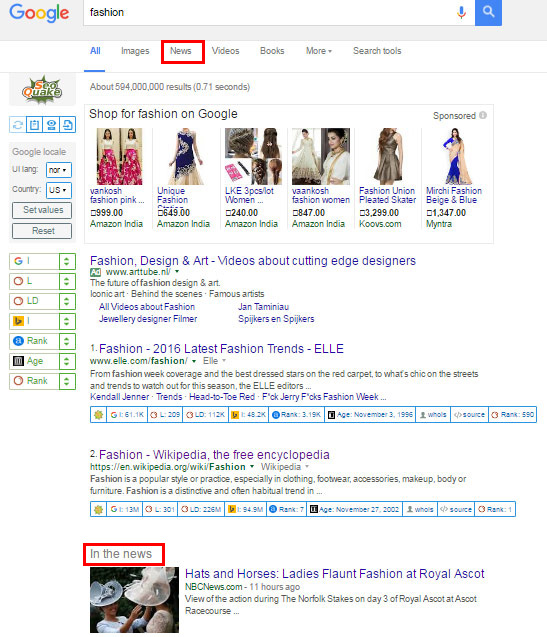

Get Into Google News

News articles get pulled by Google on two SERPs—the traditional SERP you’re used to and the News section.

You may not have thought of Google News as a traffic source, but consider my point. It’s a traffic wellspring!

Check out this screen shot:

Getting into Google News requires perseverance, honest reporting, cutting-edge articles, and regular updates.

If you are up to it and want your website to show up on the Google News SERP, here’s what you should do:

- Start a “News” section on your blog/site. Update it regularly (1-2 newsy posts a day is a good practice).

- Publish authoritative, unique, original, and newsworthy content. For research, set up a Google Alert for keywords in your niche.

- Informational articles such as how-tos and guides do not qualify. Every post must be newsy.

- Do not publish aggregated content.

- Every news article you write must be authoritative.

- The byline of each post must be linked to the author’s profile, which should contain their contact information and links to their social media profiles.

- Follow the Google quality guidelines before starting your news section.

- You need to subscribe to a paid Google account to become a Google News Partner because you can’t get in with a free account. The best thing is to sign up for a Google Apps email account, available for as low as $5 per month (https://apps.google.com/pricing.html).

- Finally, start publishing, and enroll as a Google News Partner after building up sizeable content (at least 50 pages).

Yeah, it reads like a slow process, but it’s worth millions!

Step 11 – Use Tools to Outperform Content Marketing

What’s the number one way websites are getting links these days? Content marketing, right? And although that’s correct, it doesn’t mean it is the best form of link building.

I’ve found that releasing free tools in the marketplace generates more backlinks and traffic over time. In other words, it is a better investment than content marketing.

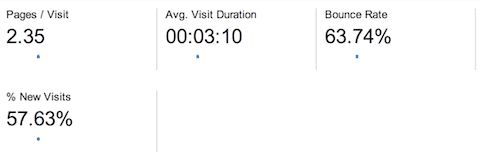

By releasing a free tool on Quick Sprout, we were able to:

- Increase our pageviews per visitor from 1.8 to 2.35.

- Decrease our bounce rate from 74% to 63%.

- Increase our time on-site from 2 minutes 10 seconds to 3 minutes 10 seconds.

- Increase visitor loyalty.
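To put those before-and-after numbers on a common scale, here is a minimal sketch that converts each one into a relative percentage change. The function name `relative_change` and the `metrics` dictionary are our own illustrative choices, and the time-on-site values are simply the figures above converted to seconds.

```python
def relative_change(before: float, after: float) -> float:
    """Return the change from `before` to `after` as a percentage of `before`."""
    return (after - before) / before * 100


# Before/after figures quoted in the list above.
metrics = {
    "pageviews per visitor": (1.8, 2.35),
    "bounce rate (%)": (74, 63),
    "time on site (seconds)": (130, 190),  # 2:10 and 3:10 expressed in seconds
}

for name, (before, after) in metrics.items():
    # The "+" in the format spec prints an explicit sign for gains and drops.
    print(f"{name}: {relative_change(before, after):+.1f}%")
```

Run as written, this works out to roughly 31% more pageviews per visitor, a relative drop in bounce rate of about 15% (11 percentage points), and about 46% more time on-site.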

The key to releasing free tools that generate thousands of visitors and links is to create something that is easy to use and in high demand. The best way to figure out what to release is to see which companies are doing extremely well in your space.

Conclusion

Search engine optimization isn’t just a fad that’s going to phase out soon.

It’s something your website needs to concentrate on right now and in the future as well.

If you’re just starting to focus on SEO, you’re a little bit behind, but it’s definitely not too late to implement the strategies I just talked about.

Don’t get overwhelmed.

Start with a few, and move on to the others.

Monitor your results.

Checking your traffic and search ranking will help validate your SEO strategy.

Soon enough, you’ll be making your way toward the top search results on Google.

Who knows, you might even be able to claim that number one spot.